Apple announces raft of accessibility updates to enhance independence for disabled people – AT Today

Technology giant Apple has announced new accessibility features coming later this year that are designed to make its products easier to navigate for disabled people.

Key assistive features include eye-tracking technology for tablets and smartphones, the ability to perform tasks by making custom sounds, and new ways for people with hearing impairments to experience music on smartphones.

“Each year, we break new ground when it comes to accessibility,” said Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives. “These new features will make an impact in the lives of a wide range of users, providing new ways to communicate, control their devices, and move through the world.”

Eye Tracking

Powered by AI, Eye Tracking gives users a built-in option for navigating iPad and iPhone, Apple’s tablet and smartphone, using only their eyes.

Designed for users with physical disabilities, Eye Tracking uses the front-facing camera to set up and calibrate in seconds. All data used to set up and control this feature is kept securely on device and is not shared with Apple.

Eye Tracking works across iPad and iPhone apps. It does not require additional hardware or accessories.

With Eye Tracking, users can navigate through the elements of an app and use Dwell Control to activate each element, accessing additional functions such as physical buttons, swipes, and other gestures solely with their eyes.

Music Haptics

iPhone users who are deaf or hard of hearing can experience music in a new way with Music Haptics. With this accessibility feature turned on, the Taptic Engine in iPhone plays taps, textures, and refined vibrations to the audio of the music. Music Haptics works across millions of songs in the Apple Music catalogue.

New features for a wide range of speech

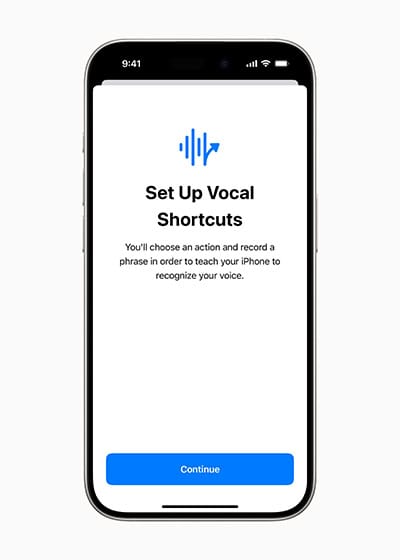

With Vocal Shortcuts, iPhone and iPad users can assign custom utterances that Siri, Apple’s digital assistant that responds to voice commands, can understand to launch shortcuts and complete complex tasks.

Apple has also announced Listen for Atypical Speech. This feature gives users an option for enhancing speech recognition for a wider range of speech. It uses on-device machine learning to recognise user speech patterns.

Designed for users with acquired or progressive conditions that affect speech, such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke, these features provide customisation and control.

“Artificial intelligence has the potential to improve speech recognition for millions of people with atypical speech, so we are thrilled that Apple is bringing these new accessibility features to consumers,” commented Mark Hasegawa-Johnson, principal investigator of the Speech Accessibility Project at the Beckman Institute for Advanced Science and Technology at the University of Illinois Urbana-Champaign.

“The Speech Accessibility Project was designed as a broad-based, community-supported effort to help companies and universities make speech recognition more robust and effective, and Apple is among the accessibility advocates who made the Speech Accessibility Project possible.”

CarPlay gets accessibility updates

CarPlay, which allows users to safely use iPhone features while driving, is getting new accessibility features: Voice Control, Color Filters, and Sound Recognition.

With Voice Control, users can navigate CarPlay and control apps with just their voice. With Sound Recognition, drivers or passengers who are deaf or hard of hearing can turn on alerts to be notified of car horns and sirens. For users who are colourblind, Color Filters make the CarPlay interface visually easier to use, with additional visual accessibility features including Bold Text and Large Text.

Accessibility features coming to visionOS

This year, accessibility features coming to visionOS, the operating system for Apple’s mixed reality headset Vision Pro, will include systemwide Live Captions to help everyone — including users who are deaf or hard of hearing — follow along with spoken dialogue in live conversations and in audio from apps.

Apple Vision Pro will add the capability to move captions using the window bar during Apple Immersive Video, as well as support for additional Made for iPhone hearing devices and cochlear hearing processors.

Updates for vision accessibility will include the addition of Reduce Transparency, Smart Invert, and Dim Flashing Lights for users who have low vision or those who want to avoid bright lights and frequent flashing.

“Apple Vision Pro is without a doubt the most accessible technology I’ve ever used,” said Ryan Hudson-Peralta, a Detroit-based product designer, accessibility consultant, and cofounder of Equal Accessibility. “As someone born without hands and unable to walk, I know the world was not designed with me in mind, so it’s been incredible to see that visionOS just works. It’s a testament to the power and importance of accessible and inclusive design.”

Apple also announced a number of smaller accessibility updates.